Artificial Intelligence (AI) has transformed many industries by enabling new solutions and improving efficiency.

Unfortunately, the same capabilities are also being misused for harmful purposes, most notably to run scams.

Fraudsters are using AI-generated content, including text, images, audio, and video, to craft highly convincing and sophisticated scams.

Here’s a look at how this content is being exploited:

- Deepfake audio and video scams

Deepfake technology uses AI to produce realistic audio and video that mimics real people.

Scammers exploit this technology to trick victims into believing they are communicating with someone they know and trust, such as a family member, friend, or colleague.

Examples include:

- Voice Cloning: Scammers replicate a loved one’s voice to call victims, claiming they are in trouble and urgently need financial help.

- Fake CEO Videos: Fraudsters generate videos of corporate executives directing employees to transfer money or disclose sensitive information.

- Celebrity Endorsements: Deepfake videos featuring celebrities are used to promote fraudulent investment schemes or products.

- Fake social media profiles and interactions

AI tools can create fake social media profiles complete with realistic AI-generated photos and believable backstories.

These profiles are designed to win victims' trust gradually, often as a setup for romance scams or fraudulent investment schemes.

For example, romance scammers develop false identities to forge emotional bonds with victims before soliciting funds.

Additionally, fake influencers advertise bogus products or promote pyramid schemes.

- Impersonation and identity theft

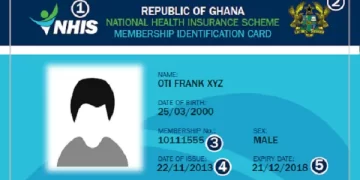

AI-generated content is increasingly being exploited for identity theft.

Fraudsters can use AI tools to produce fake IDs, passports, and other documents, which they then use to open bank accounts, apply for loans, or commit other kinds of fraud.

- Manipulation of public opinion

Although not a direct scam, AI-generated content is used to spread misinformation and sway public opinion, often for financial or political gain.

For instance, fake news articles or social media posts can be used to drive traffic to scam websites or to manipulate stock prices.

- Investment and crypto scams

AI-generated content is frequently used to promote fraudulent investment schemes, especially in the cryptocurrency sector.

Fraudsters produce fake testimonials, whitepapers, and even AI-generated “experts” to entice victims into investing in non-existent or worthless assets.

- Phishing scams with AI-generated text

AI language models such as ChatGPT can produce highly realistic, personalized emails, messages, or social media posts.

Scammers leverage these tools to create phishing emails that resemble genuine communications from banks, government agencies, or reputable companies.

The content is typically free of grammatical mistakes and customized for the recipient, making it more difficult for individuals to recognize the scam.

Examples include fake customer-support messages claiming there has been an unauthorized transaction, and emails impersonating colleagues or executives that request urgent payments.

- AI-generated fake websites and reviews

AI tools can generate fake websites that closely resemble legitimate businesses, featuring AI-generated product descriptions and customer reviews.

These sites are designed to sell counterfeit goods, steal payment information, or trick users into downloading malware.

AI can also generate fake reviews that make fraudulent products or services look credible, making it harder for consumers to tell authentic offerings from fake ones.

SOURCE: PULSE GHANA