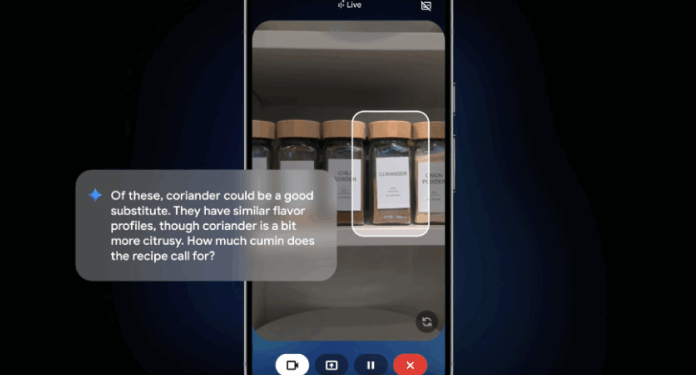

Google is set to enhance its Gemini Live AI assistant with a range of new features, including the ability to interact with your Phone, Messages, and Clock apps. Starting next week, Gemini Live will be able to highlight items directly on your screen while you share your camera feed, making it easier for the assistant to point out specific objects.

For example, if you’re searching for the right tool for a project, you can point your smartphone’s camera at your tools, and Gemini Live will highlight the correct one on your display. This feature will debut on the newly announced Pixel 10 devices, launching on August 28. Google will also roll out this visual guidance to other Android devices at the same time, with plans to expand to iOS in the coming weeks.

Additionally, Google is introducing new integrations that will allow Gemini Live to work with more apps, such as Messages, Phone, and Clock. If you’re discussing directions with Gemini and realize you’re running late, you can interrupt the conversation by saying, “This route looks good. Now, send a message to Alex that I’m running about 10 minutes late.” The AI will then draft a text message for you.


Finally, Google is updating the audio model for Gemini Live, claiming it will “dramatically improve” the chatbot’s use of key elements of human speech, including intonation, rhythm, and pitch. Gemini will soon adjust its tone based on the subject matter, using a calmer voice for stressful topics.

Users will also be able to control how fast Gemini speaks, similar to how ChatGPT lets users adjust the style of its voice. If you request a dramatic retelling of a story from a specific character’s perspective, the assistant may adopt an accent to make the narrative more engaging.

SOURCE: THE VERGE