An AI-driven system may soon handle the evaluation of potential harms and privacy risks for up to 90% of updates to Meta apps like Instagram and WhatsApp, according to internal documents reportedly reviewed by NPR.

A 2012 agreement between Facebook (now Meta) and the Federal Trade Commission requires privacy reviews of product updates. Until now, those reviews have been conducted primarily by human evaluators.

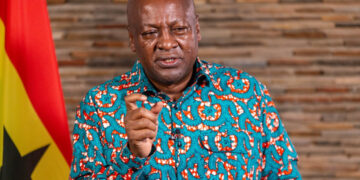


Under the new system, product teams will fill out a questionnaire about their work and typically receive an “instant decision” from AI regarding identified risks, along with requirements for updates or features before launch.

The AI-focused approach is meant to speed up product updates, but a former executive told NPR it may create "higher risks," because negative consequences of product changes are less likely to be caught and addressed before launch.

In a statement, a Meta spokesperson noted the company has invested over $8 billion in its privacy program and is committed to delivering innovative products while meeting regulatory requirements.

“As risks evolve and our program matures, we enhance our processes to better identify risks, streamline decision-making, and improve user experience,” the spokesperson added. “We use technology to ensure consistency in low-risk decisions while relying on human expertise for thorough assessments of complex issues.”

Source: TechCrunch